Creating a Solid SSIS ETL Solution: Part 3 – Package Template Overview

Creating a Solid SSIS ETL Solution: Navigation

Part 1. – Package and Error Logging

Part 1. – Package and Error Logging (cont.)

Part 2. – Package Configurations

Part 3. – Package Template Overview

Part 4. – Email Task and Package Execution Report

Ok so we’ve got our logging system set up as well as our package configurations. Now we can examine the template’s pieces with a good working knowledge of what we’re looking at.

Global Container

The first thing I want to ensure when I begin building a package is that it will somehow let me know it has completed or has errored out. I’ve heard many authors of articles mention how they don’t want to get email messages, but if you don’t have an operations department watching servers for you, how else are you to receive information that a specific job failed or a build didn’t work as intended? Personally I love the fact I can get an email from SSIS when a package completes or fails (depending on how critical the package is). No matter where I am I can receive important emails like this on my smart phone. If the data warehouse doesn’t build correctly I need to know about it immediately, especially if I’m on vacation.

In my package template I can set up the package so I get an email on completion, error, or on success (this last option is rather odd, but possible). This is done via the email task at the end of the package. Initially I tried to place this in the OnPostValidate or OnPostExecute handler (at the package level), but these handlers can fire more than once, so I found the handlers to be bad candidates for containing the email task. After many frustrating attempts to put the email task in an event handler, I decided to throw in the towel and just stick it at the end of the control flow.

In order to do this I set up a global sequence container, which contains every other task in the control flow. While this is an ugly approach, it ensures the email task will fire no matter the circumstance.

Package Level Start, End, and Error Logging

A similar approach is taken for package log start and finish tasks (Execute SQL tasks). Again I tried to place these in the OnPre/PostValidate or OnPre/PostExecute handlers, so they’d be virtually invisible to the package, but without success. It’s been awhile since I tried to stick them in there, but if I remember correctly those handlers are not the last things to be executed, and so some errors can be missed if the start and/or end are placed in them.

The first and last task in the global container starts and stops the package log at the package level. Initially the result type ID is set to 3 in case the package fails at the PackageLog Start task (if the log tables or sprocs are set up incorrectly, for example). Once the package log row has been inserted into the SSISPackageLog table via the PackageLog Start task and sproc, the package then enters the extraction container, the name of which is unimportant.

(Since creating this template a few months ago, I’ve found the extraction container to be rather worthless. It can be removed entirely. I will upload a new template to this series shortly.)

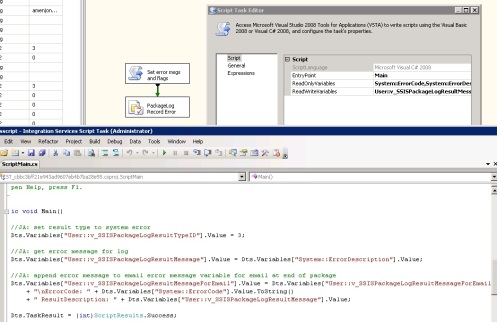

Now that the package log has been initiated, the best logging feature of the package has been activated: the error capturing capability of the template. At this point in package execution if an error occurs the on task failure handler will fire collecting the name of the task which failed, then the on error handler will fire changing the result type to 3 indicating system failure, inserting the ErrorDescription variable into the SSISPackageLogResultMessage variable, and collecting the error code and message in the SSISPackageLogResultMessageForEmail variable.

Once the error flags and messages have been set, the package will try to close out the detail and package log records. The on error handler will either try and close out the current open detail record in the SSISPackageLogDetail table with the error code and message associated or if a detail record has not been created it will create one, again appending the error code and message. While the error handler is closing out or creating the detail record, it will also append and end time to the record. After this the rest of the tasks will fail or be skipped, and the PackageLog End task will fire, closing out the package log with end time and failure indicated by package result type id 3.

If the package log start insert works as intended, the package variables are now initiated in the set package variables script. This particular package template is set up to ingest data from files on disk, so at this point the package will check to make sure files exist to be ingested. This package will fail if it counts more or less than 1 file in the target folder, but it can be modified to either fail if no files are found or continue processing regardless.

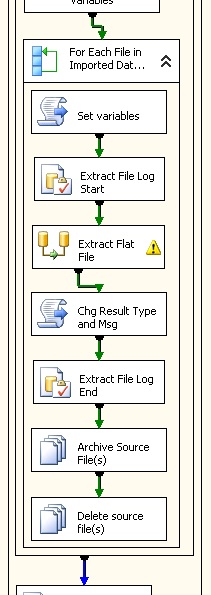

Detail Container

If the package log insert works as intended and files are found, the package then drops into the ForEach enumerating container (from here on out called the detail container). At this stage of package execution, I set up a separate detail container for each extraction, transformation, and/or load process. The container type is not important; the only thing that is important is each of these detail containers must contain the Set Variables, File Log Start, and File Log End tasks. The package I added to the sample files contains only one such container, but more can be appended by simply adding a new container with the set variables, start, and end tasks inside. For each new container add a workflow arrow from the previous container to the new container. Just remember to make sure your last container has an on completion workflow arrow to the PackageLog End task.

Inside the detail container the first thing the package comes across is the Set Variables task, which resets the detail level variables: detail result type id, result message, row counts, and in this particular case the archive and source file paths. These variables will obviously change depending on what kind of work you have the detail container doing.

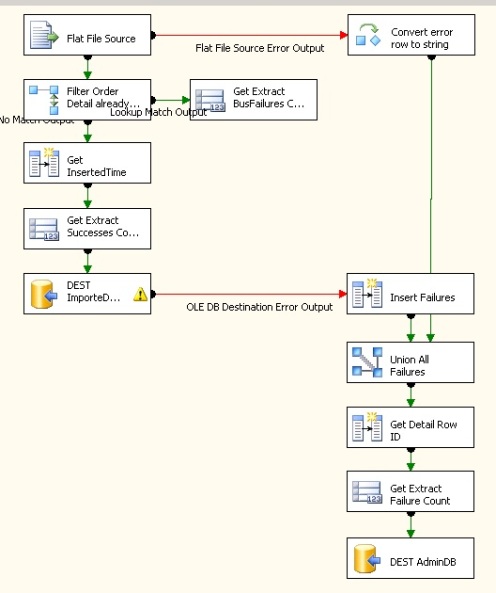

Inside the Data Flow

Once the detail log has been intiated, the control flow enters the data flow task. This is where success, business failure, and system failure record counts are collected as well as where erroneous data is funneled into the SSISPackageLogDetailErrorRecord table. As can be seen in the image below, a concatenation task is created at each juncture of the flow for which we expect a system failure may occur on the data being moved at that time. These tasks concatenate the original row contents, pass them to the union all task, they pass through the system error row counter, and then finally to the ErrorRecord table. This makes it extremely easy to figure out which rows failed conversion, and then by examining the concatenated row data, why the row failed out of the flow. My rule of thumb here is if I think a row has a chance to fail to flow through a task, I pass the failure rows over to the failure collector. It’s so much easier to correct an ETL process when you have the exact data which failed to convert or insert, etc.

One part of the data flow, which can be tricky, is row counting. As you can see in the image and package if you downloaded it is I count the successes and then allow some insert failures to flow over to the error recording side of the data flow. This actually could cause a row to be double counted if it fails the final insertion. In this particular package it is not extremely important to capture the exact counts, but in some packages I’ve created since this template the counts have had a higher priority. To solve the dilemma of double counting rows I do a pre-process row count in a separate data flow before the main flow, and then subtracted the error counts from the pre-process total count to obtain the success count (this is done in the ‘Chg Result Type and Msg’ task). This way my final counts always add up correctly.

Archive and Delete File Tasks

Since this particular template extracts data from files, after the data flow is complete the package archives the file (copy) and then deletes the file (delete). These tasks have been set up with dynamic paths, which were captured at the beginning of the ForEach enumerator in the Set Variables task. This makes it very easy to keep an archive of files in case you need to look at the source file or re-process the file entirely.

One thing of note. Recently I noticed when trying to delete Excel files post processing, the files would be locked from being opened; therefore they could not be deleted. I solved this problem by moving the Archive task to just after the Set Variables task, and then reading from the archived file. This allowed the main file to be deleted as it had no read lock on it. It had only been copied; not opened.

Next: Email Notifications and Package Execution Report

Well that explains the majority of the package template I’ve created. If there are any questions on particular details please comment, and I’ll try and answer your questions.

See you in two weeks when I explain the dynamic email task in more detail along with an SSIS Execution Report I created in SSRS. Expect the post on or before Jan 10th 2011! Have a happy new year! ==>

Great stuff but wondering if you can provide any insight into use of propogate or other means of disabling OnPostExecute for child packages?

Also – note that error code & message do not show as variables unless the context is the Event Handler tab. That threw me for an endless loop at first – thought it was deprecated variables from earlier version as didn’t see them on control flow variables and couldn’t put watch on them.

Exactly what I was thinking. The error code and message are actually not available in all handlers. I think they’re only available in the OnError handler, which makes them even more annoying. The only way to capture the exact message is via a script in the OnError handler.

As far as your query about disabling OnPostExecute for child packages it is indeed possible. You must set up a variable to hold the parameter coming from the parent (TRUE or FALSE for disabled), then set up a package configuration in the child which will use a parent variable to set the value of the variable you just created in the child. Once this relationship is set up, go to the OnPostExecute handler in the child. In the properties, open the Expressions UI (click the ellipsis button). In the property drop down select ‘Disable’. For the expression side, select the variable you created in the beginning.

Hope this helps.