Does Android battery life suck, or is it your usage? In short, it does suck compared to other popular alternatives (the i range). However, the purpose of this post is not to start a witch hunt or a rant, but to arm you with tools to debug your battery usage. I will help you track down your battery hog(s) and then suggest some methods to tackle them.

There are 4 main battery hogs:

- Cell Phone Usage

- Display

- CPU usage

- Sensors (GPS, Wifi, etc.)

Let's start with the easy one:

Cell Phone Usage

The cell tower is what provides your connectivity (EDGE and 3G). Unfortunately, a weak signal can cause the cell radio to use an exorbitant amount of battery. If you are experiencing a full battery drain in about 2-3 hours, this is most probably the culprit. The first thing to do is go to Settings -> About Phone -> Battery Use -> Cell Phone standby and look at "Time spent without signal". Any number other than 0 is very dangerous. There is no complete solution to this problem. The one workaround is to search for networks manually and fix the selection to your operator. This will prevent the phone from searching for a network when the signal is weak. However, this may not work when you are roaming, and if you spend most of your time out of signal, the phone will still consume battery.

Display

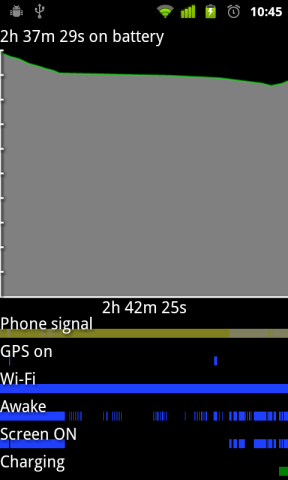

The only downside of having a large screen is that it consumes a lot of charge. If you are using an LCD screen, your phone is only likely to survive 4-5 hrs of screen-on time. This assumes that you are not performing any CPU-intensive task during that period, like gaming or video. It is highly likely that you will get through 4 hrs of screen time over the course of a day, hence it's unlikely that your phone will survive more than a day. The only aspect to debug here is whether some freaky software or buggy ROM is keeping your screen on. Gingerbread provides a clean interface to check your screen-on period. You can use Juice Plotter on non-Gingerbread phones, but it has been known to consume a lot of battery itself, so make sure you only use it to debug your screen and not on a regular basis.

Have a look at your Screen ON time and make sure you are getting around 4 hrs of usage. Anything less than that means that something else is draining your battery. Look through the CPU usage and Sensor usage sections to debug those issues.
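If you want to sanity-check that 4 hrs figure against your own phone, you can divide the battery capacity by the average current draw while the screen is on (a current-monitoring app can show the draw in mA). Here is a rough Python sketch. The function name and the example numbers are made up for illustration, and it assumes the draw stays constant, which it never quite does.

```python
def screen_on_hours(capacity_mah: float, avg_draw_ma: float) -> float:
    """Estimate hours of screen-on time from capacity (mAh) and average draw (mA)."""
    if avg_draw_ma <= 0:
        raise ValueError("avg_draw_ma must be positive")
    return capacity_mah / avg_draw_ma


# Hypothetical numbers: a 1400 mAh battery and a 350 mA average draw with the screen on.
hours = screen_on_hours(1400, 350)
print(f"Estimated screen-on time: {hours:.1f} h")  # Estimated screen-on time: 4.0 h
```

If your measured draw gives a number well below 4 hrs, the screen is probably not the only thing eating your battery.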

Solutions:

- Choose a phone with an AMOLED screen: AMOLED screens consume very little battery in comparison to LCD screens.

- If you have an AMOLED screen, then choose darker themes and wallpapers while browsing/surfing. A light-colored pixel consumes about 5.8 times more energy than a dark pixel. This method will not work for LCD phones. You can see the impact of a changed wallpaper using Current Widget. This will tell you how much charge your phone is consuming (wait a couple of minutes for it to refresh when you change the wallpaper).

- Choose lower screen brightness in general. Rely on Power control widgets to increase screen brightness when required.

- Reduce the display timeout to 30 sec-1 min. Adjust it to a time that is comfortable for you.
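To get a feel for what the 5.8 times figure means for an AMOLED wallpaper, here is a back-of-the-envelope Python sketch. It assumes a crude two-level model where every pixel is either "dark" (cost 1) or "light" (cost 5.8, the ratio quoted above). Real panels vary continuously with color and brightness, so treat the result as a rough comparison only. The function name is hypothetical.

```python
LIGHT_TO_DARK_RATIO = 5.8  # light pixel vs dark pixel energy, as quoted in this post


def relative_energy(light_fraction: float, ratio: float = LIGHT_TO_DARK_RATIO) -> float:
    """Energy of a wallpaper relative to an all-dark one (all-dark = 1.0).

    Assumes each pixel is either fully 'dark' or fully 'light'.
    """
    if not 0.0 <= light_fraction <= 1.0:
        raise ValueError("light_fraction must be between 0 and 1")
    return (1.0 - light_fraction) + light_fraction * ratio


# Hypothetical wallpapers: mostly dark (20% light pixels) vs mostly light (80%).
print(f"{relative_energy(0.2):.2f}")  # 1.96
print(f"{relative_energy(0.8):.2f}")  # 4.84
```

Under this simplified model, the mostly light wallpaper costs roughly two and a half times as much as the mostly dark one.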

CPU usage

- Launch Spare Parts. Fortunately, this is bundled in Gingerbread phones. For Froyo, you can try out this market app https://market.android.com/details?id=com.androidapps.spare_parts and hope it will work.

- Look at Battery history inside Spare Parts. Select “Other Usage” in the first drop-down.

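- Check out Running time vs Screen On time. If Running time is much higher than Screen On time, something is keeping the CPU awake while the screen is off.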

- Launch Spare Parts

- Look at battery history and select “Partial Wake Usage”

- Now you will see which app is the culprit that is keeping the phone awake and eating up CPU.

- Use less push sync in general. True push is only available with Blackberry; everybody else is doing a very frequent pull to emulate push, so make sure you don’t use push where it’s not required.

- In my opinion, you should disable auto sync altogether and do a manual sync when needed. You can put the auto sync button in your power widget and flick it on and off whenever needed.

- Use Juice Defender; it will give you good usage patterns (disabling sync at night, etc.).

- Make your CPU scale up slowly (conservative scaling rather than a power-intensive setting), especially when you have very little battery left.

- Reduce the highest CPU frequency to something like 700 MHz, especially during the night or when the screen is off.
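The Running time vs Screen On time comparison from Spare Parts can be boiled down to a single number: the share of awake time during which the screen was off. Below is a small Python sketch of that arithmetic. The function name and the sample readings are hypothetical, and you would type in the two values by hand from the Battery history screen.

```python
def background_awake_fraction(running_hours: float, screen_on_hours: float) -> float:
    """Fraction of the phone's awake time spent with the screen off."""
    if running_hours <= 0:
        raise ValueError("running_hours must be positive")
    if not 0 <= screen_on_hours <= running_hours:
        raise ValueError("screen_on_hours must be between 0 and running_hours")
    return (running_hours - screen_on_hours) / running_hours


# Hypothetical readings: phone awake for 6 h, screen on for only 2 h of that.
fraction = background_awake_fraction(6, 2)
print(f"Awake with screen off: {fraction:.0%}")  # Awake with screen off: 67%
```

A high percentage suggests that background apps are holding the CPU awake, which is when the “Partial Wake Usage” view is worth a look.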

Sensor usage

- Disable both ways of determining your location in Settings => Location and Security. If you really need location, then go for “use wireless location” instead of GPS. Use GPS only in critical situations where a precise location is really necessary.

- Change the Wifi sleep policy to “When screen is off” (Settings => Wireless and Networks => Wifi Settings => Menu key => Advanced => Wifi Sleep policy). This will switch off your wifi and turn on the mobile network when you are not using the screen. This way your background apps can use your slower mobile network to sync while saving wifi juice.

- Turn on “Only 2G network” (Settings => Wireless and Networks => Mobile Networks). This will switch off your 3G network and use only 2G, which uses much less battery but is much slower. You can also use a 2G/3G switcher in the power widget to control this if you rely on 3G for browsing.
